Our Technology:

Built for the Next Generation of AI

Accelerate project delivery through our AI-powered development environment and focus on innovation instead of struggling with technical complexities and context limitations.

THE PROBLEM

“Fine-tuning LLMs is resource-heavy, handles variance poorly, and struggles to scale. Today's AI databases, such as custom vector-based RAG systems, require excessive processing and lose context as data grows, degrading results.”

What Our Technology Delivers

By lowering the context burden on large language models (LLMs), Belva enables unprecedented scale and performance.

Massive context windows that allow AI to retain and reason over significantly more information.

Fewer hallucinations and more grounded outputs.

Improved task execution, even in complex or dynamic environments.


Greater autonomy, enabling AI to act with context-aware precision.

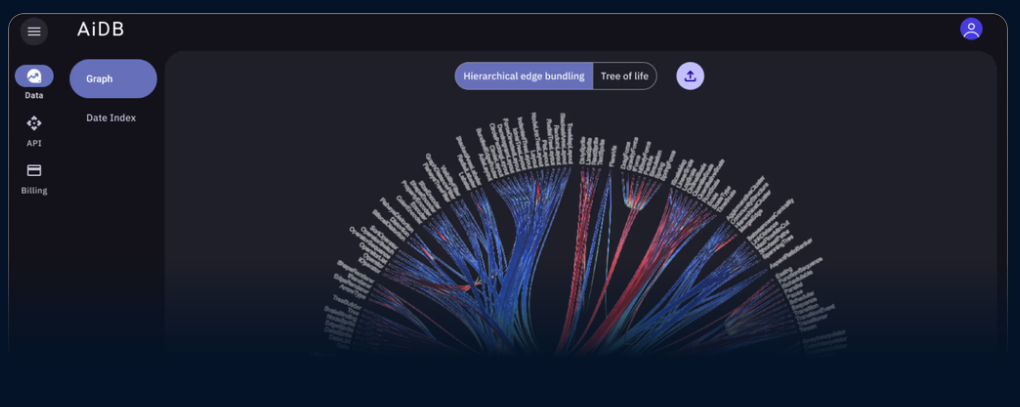

Powered by Belva Innovation

We’ve completely redefined how data is indexed and contextually mapped for LLMs. Our technology automatically creates relational maps that make your LLM smarter with each new input, using fewer context tokens and delivering better results, all without extra tuning.

Better Outputs, Fewer Tokens, Built to Scale.

Belva’s core technology is designed to scale alongside your needs. Future advancements will expand our ability to handle enterprise-level complexity, support encrypted data structures, and continuously push the boundaries of secure, high-performance AI infrastructure.

Now you can upload entire repositories and virtually eliminate context-window limits, allowing your AI to generate code with enhanced understanding and precision. The future of AI-driven development is here.

Built by Developers, for Developers

Get a glimpse of why our team has been excited to create and innovate with Belva Architect.